Ⅰ. Integrating Redshift with Google Sheets (Gsheet)

1. Basic GCP setup

Log in to Google Cloud.

https://console.cloud.google.com/welcome?project=programmers-devcourse-docker

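Create a project.

Go to APIs & Services > Credentials > Create Credentials and select Service account.

Service account name: enter Gsheet

Grant this service account access to the project: select the Editor role.

Then, back on the APIs & Services > Credentials page, click the email of the Gsheet service account created above.

Go to Keys > Add Key > JSON and download the new key.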

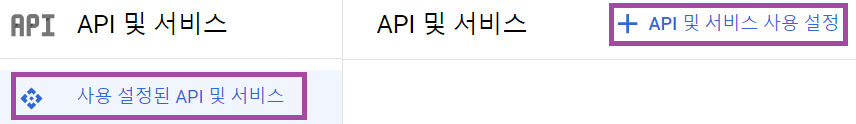

Enable two APIs. Go to APIs & Services > Enabled APIs & services > Enable APIs and Services.

Click the Enable button for the Google Sheets API.

Enable the Google Drive API.

If an API is not enabled, an error like the following appears.

Error message

"code": 403,

"message": "Access Not Configured. Drive API has not been used in project {your project} before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/drive.googleapis.com/overview?project={your project} then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry."

2. Registering the Airflow Variable

Enter the following:

Key: google_sheet_access_token

Val: the contents of the key file downloaded in step 1
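Because the Variable has to hold the complete JSON key, a quick check before pasting it can catch a truncated or wrong file. The following is a minimal sketch, assuming the usual layout of a Google service-account key file; `check_service_account_key` is a hypothetical helper and not part of Airflow or the course code.

```python
import json

# Fields normally present in a Google service-account JSON key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> str:
    """Return the service-account email if `raw` looks like a usable key."""
    info = json.loads(raw)  # raises ValueError on truncated or invalid JSON
    missing = [field for field in REQUIRED_FIELDS if field not in info]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if info["type"] != "service_account":
        raise ValueError(f"unexpected key type: {info['type']}")
    return info["client_email"]


if __name__ == "__main__":
    # Placeholder values for illustration only, not a real key.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "my-project",
        "private_key": "-----BEGIN PRIVATE KEY-----\n...",
        "client_email": "gsheet@my-project.iam.gserviceaccount.com",
    })
    print(check_service_account_key(sample))
```

The returned email is usually the address the spreadsheet needs to be shared with so that the service account can open it.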

Ⅱ. Saving Gsheet content to a Redshift table

1. Creating the DAG: Gsheet_to_Redshift

This DAG consists of three tasks.

- download_tab_in_gsheet (PythonOperator): downloads the data in a specific Gsheet tab as a CSV file

# `sheet` is a dict that carries the keys url, tab, schema, and table.
download_tab_in_gsheet = PythonOperator(
    task_id='download_{}_in_gsheet'.format(sheet["table"]),
    python_callable=download_tab_in_gsheet,
    params=sheet,
    dag=dag)

def download_tab_in_gsheet(**context):
    url = context["params"]["url"]
    tab = context["params"]["tab"]
    table = context["params"]["table"]
    data_dir = Variable.get("DATA_DIR")

    # DATA_DIR must end with a path separator because the file name is appended directly.
    gsheet.get_google_sheet_to_csv(
        url,
        tab,
        data_dir+'{}.csv'.format(table)
    )

- copy_to_s3 (PythonOperator): stores the CSV file in AWS S3

copy_to_s3 = PythonOperator(
    task_id='copy_{}_to_s3'.format(sheet["table"]),
    python_callable=copy_to_s3,
    params={
        "table": sheet["table"],
        "s3_key": s3_key
    },
    dag=dag)

def copy_to_s3(**context):
    table = context["params"]["table"]
    s3_key = context["params"]["s3_key"]

    s3_conn_id = "aws_conn_id"
    s3_bucket = "grepp-data-engineering"
    data_dir = Variable.get("DATA_DIR")
    local_files_to_upload = [ data_dir+'{}.csv'.format(table) ]
    replace = True  # overwrite the object if the key already exists

    s3.upload_to_s3(s3_conn_id, s3_bucket, s3_key, local_files_to_upload, replace)

- run_copy_sql (S3ToRedshiftOperator): bulk-loads (COPY) the CSV file stored in AWS S3 into Redshift

S3ToRedshiftOperator(
    task_id='run_copy_sql_{}'.format(sheet["table"]),
    s3_bucket="grepp-data-engineering",
    s3_key=s3_key,
    schema=sheet["schema"],
    table=sheet["table"],
    copy_options=['csv', 'IGNOREHEADER 1'],
    method='REPLACE',
    redshift_conn_id="redshift_dev_db",
    aws_conn_id='aws_conn_id',
    dag=dag
)
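The `gsheet` module called by download_tab_in_gsheet is a helper whose source is not shown in this post. As a rough sketch of what such a helper might do, the code below reads one tab with the gspread library and writes it to a CSV file. Using gspread is an assumption about the implementation, the names `fetch_tab_rows` and `write_rows_to_csv` are hypothetical, and `key_info` is assumed to be the parsed JSON key stored in the google_sheet_access_token Variable.

```python
import csv


def fetch_tab_rows(key_info: dict, url: str, tab: str) -> list:
    """Read every cell of one tab as a list of rows (requires network access)."""
    import gspread  # third-party; imported lazily so the CSV helper works alone

    client = gspread.service_account_from_dict(key_info)
    worksheet = client.open_by_url(url).worksheet(tab)
    return worksheet.get_all_values()


def write_rows_to_csv(rows: list, path: str) -> int:
    """Write rows (header row first) to `path`; return the number of data rows."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)
    return max(len(rows) - 1, 0)
```

If the first row of the tab holds the column names, it ends up as the first line of the CSV file, which is the line that the IGNOREHEADER 1 copy option in run_copy_sql skips.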

Ⅲ. Writing Redshift table content to a Gsheet

1. Creating the DAG: SQL_to_Sheet

This DAG consists of a single task.

- update_sql_to_sheet1: extracts data from a Redshift table with SQL and writes the result to the Gsheet.

sheet_update = PythonOperator(
    dag=dag,
    task_id='update_sql_to_sheet1',
    python_callable=update_gsheet,
    params={
        "sql": "SELECT * FROM analytics.nps_summary",
        "sheetfilename": "spreadsheet-copy-testing",
        "sheetgid": "RedshiftToSheet"
    }
)

def update_gsheet(**context):
    sql = context["params"]["sql"]
    sheetfilename = context["params"]["sheetfilename"]
    sheetgid = context["params"]["sheetgid"]

    gsheet.update_sheet(sheetfilename, sheetgid, sql, "redshift_dev_db")
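The internals of `gsheet.update_sheet` are not shown in this post. One step such a helper probably needs is turning the SQL result into a grid of cells that a spreadsheet API can accept. The sketch below illustrates only that step under this assumption; `to_sheet_values` is a hypothetical name, and the column names and rows are assumed to come from a database cursor.

```python
from datetime import date, datetime
from decimal import Decimal


def to_sheet_values(columns, rows):
    """Build a header row plus data rows made of JSON-friendly cell values."""
    def cell(value):
        if value is None:
            return ""  # show NULL as an empty cell
        if isinstance(value, (datetime, date)):
            return value.isoformat()
        if isinstance(value, Decimal):
            return float(value)  # NUMERIC columns often arrive as Decimal
        return value

    return [list(columns)] + [[cell(v) for v in row] for row in rows]


if __name__ == "__main__":
    # Made-up rows for illustration only.
    print(to_sheet_values(["month", "nps"], [(date(2023, 5, 1), Decimal("12.5"))]))
```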

Ⅳ. Airflow API and monitoring

The API can be used to retrieve information about Airflow.

1. Enabling the Airflow API

airflow.cfg

[api]

auth_backend = airflow.api.auth.backend.basic_auth

To override the cfg setting when running docker compose up, edit the yaml file as follows.

docker-compose.yaml

AIRFLOW__API__AUTH_BACKENDS: 'airflow.api.auth.backend.basic_auth,airflow.api.auth.backend.session'

Check that the setting was applied correctly.

docker exec -it learn-airflow-airflow-scheduler-1 airflow config get-value api auth_backend

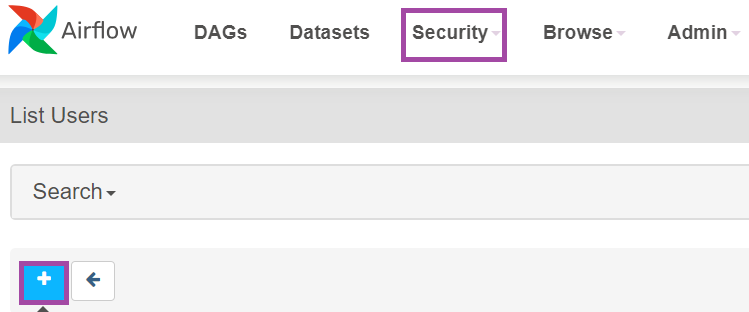

2. Creating a new Airflow user for security

The airflow user is granted every permission, so exposing its credentials could cause problems.

Create a new user for monitoring that has fewer permissions.

In the Airflow Web UI, go to Security > List Users and click the + button.

3. Calling the Airflow API

Reference: Airflow REST API (airflow.apache.org)

1) Triggering a specific DAG through the API

curl -X POST --user "<id>:<password>" -H 'Content-Type: application/json' \
  -d '{"execution_date":"2023-05-24T00:00:00Z"}' \
  "http://localhost:8080/api/v1/dags/<dag_name>/dagRuns"

Result for a DAG that had already run successfully for that date:

{
  "detail": "DAGRun with DAG ID: 'HelloWorld' and DAGRun logical date: '2023-05-24 00:00:00+00:00' already exists",
  "status": 409,
  "title": "Conflict",
  "type": "https://airflow.apache.org/docs/apache-airflow/2.5.1/stable-rest-api-ref.html#section/Errors/AlreadyExists"
}
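The same trigger call can be made from Python. The sketch below uses only the standard library and mirrors the curl command in 1); `build_trigger_request` and `trigger_dag` are hypothetical helper names, and the credentials and DAG name passed in are placeholders to be supplied by the caller.

```python
import base64
import json
import urllib.error
import urllib.request


def build_trigger_request(base_url, dag_id, execution_date, user, password):
    """Build the POST request that the curl command sends."""
    body = json.dumps({"execution_date": execution_date}).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        f"{base_url}/api/v1/dags/{dag_id}/dagRuns", data=body, method="POST"
    )
    request.add_header("Content-Type", "application/json")
    request.add_header("Authorization", f"Basic {token}")
    return request


def trigger_dag(request):
    """Send the request and return (HTTP status, response text)."""
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # A 409 Conflict arrives here when a run for that date already exists.
        return err.code, err.read().decode("utf-8", "replace")
```

Returning the status instead of raising lets a caller treat 409 as "already triggered" rather than as a failure, which is one possible design choice for a monitoring script.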

2) Listing all DAGs

curl -X GET --user "<id>:<password>" http://localhost:8080/api/v1/dags

Result: returns information about every DAG. A single entry looks like this:

{
  "dag_id": "SQL_to_Sheet",
  "default_view": "grid",
  "description": null,
  "file_token": "...",
  "fileloc": "/opt/airflow/dags/SQL_to_Sheet.py",
  "has_import_errors": false,
  "has_task_concurrency_limits": false,
  "is_active": true,
  "is_paused": true,
  "is_subdag": false,
  "last_expired": null,
  "last_parsed_time": "2023-06-18T05:21:34.266335+00:00",
  "last_pickled": null,
  "max_active_runs": 16,
  "max_active_tasks": 16,
  "next_dagrun": "2022-06-18T00:00:00+00:00",
  "next_dagrun_create_after": "2022-06-18T00:00:00+00:00",
  "next_dagrun_data_interval_end": "2022-06-18T00:00:00",
  "next_dagrun_data_interval_start": "2022-06-18T00:00:00",
  "owners": [ "airflow" ],
  "pickle_id": null,
  "root_dag_id": null,
  "schedule_interval": {
    "__type": "CronExpression",
    "value": "@once"
  },
  "scheduler_lock": null,
  "tags": [ { "name": "example" }],
  "timetable_description": "Once, as soon as possible"
}

3) Listing all Variables

curl -X GET --user "<id>:<password>" http://localhost:8080/api/v1/variables

Result: returns information about every Variable.

{
  "total_entries": 7,
  "variables": [
    {
      "description": null,
      "key": "api_token",
      "value": "12345667"
    },
    {
      "description": null,
      "key": "csv_url",
      "value": "https://s3-geospatial.s3-us-west-2.amazonaws.com/name_gender.csv"
    },
    ...
  ]
}

4) Listing the full config

curl -X GET --user "airflow:airflow" http://localhost:8080/api/v1/config

Result: the config is not returned and an error occurs instead (for security reasons).

{
  "detail": "Your Airflow administrator chose not to expose the configuration, most likely for security reasons.",
  "status": 403,
  "title": "Forbidden",
  "type": "https://airflow.apache.org/docs/apache-airflow/2.5.1/stable-rest-api-ref.html#section/Errors/PermissionDenied"
}

Q: Access to the config is blocked by default. How can it be opened up?

5) Calling the Airflow health endpoint

/health API: reports the status of the Airflow metadata database and scheduler.

curl -X GET --user "monitor:MonitorUser1" http://localhost:8080/health

Result

{"metadatabase": {"status": "healthy"},

"scheduler": {"latest_scheduler_heartbeat": "2024-01-04T15:45:24.935355+00:00", "status": "healthy"}}
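A monitoring script usually needs a yes/no answer rather than the raw JSON. A minimal sketch of that check, assuming the response has the shape shown above; `unhealthy_components` is a hypothetical helper name.

```python
import json


def unhealthy_components(health: dict) -> list:
    """Return the names of components whose status is not 'healthy'."""
    return sorted(
        name for name, info in health.items()
        if info.get("status") != "healthy"
    )


if __name__ == "__main__":
    # The /health response shown above, pasted as a string.
    raw = (
        '{"metadatabase": {"status": "healthy"}, '
        '"scheduler": {"latest_scheduler_heartbeat": '
        '"2024-01-04T15:45:24.935355+00:00", "status": "healthy"}}'
    )
    problems = unhealthy_components(json.loads(raw))
    print("all healthy" if not problems else f"check: {problems}")
```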

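5. Exporting and importing Variable and Connection information from the command line (using JSON)

Drawback: Variables and Connections registered as environment variables are not included.

The files are saved as AIRFLOW_HOME\variables.json and AIRFLOW_HOME\connections.json.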

airflow variables export variables.json

airflow variables import variables.json

airflow connections export connections.json

airflow connections import connections.json

Ⅴ. Homework

1. Writing Python code that lists the active DAGs

get_active_dags.py

import requests
from requests.auth import HTTPBasicAuth

url = "http://localhost:8080/api/v1/dags"
dags = requests.get(url, auth=HTTPBasicAuth("airflow", "airflow"))

# Print the id of every DAG that is not paused.
for d in dags.json()["dags"]:
    if not d["is_paused"]:
        print(d["dag_id"])

2. Solving the config API security restriction

1) airflow.cfg

[webserver]

expose_config = True

2) docker-compose.yaml: overrides the cfg expose_config setting with true when running up.

x-airflow-common:
  &airflow-common
  …
  environment:
    &airflow-common-env
    AIRFLOW__WEBSERVER__EXPOSE_CONFIG: 'true'

3. Checking whether the variables API also returns Variables set as environment variables

curl -X GET --user "airflow:airflow" http://localhost:8080/api/v1/variables

Variables set as environment variables in the yaml file are not returned.
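Since the API does not report them, environment-defined Variables have to be read from the environment itself. Airflow looks these up under names that start with AIRFLOW_VAR_. The sketch below lists them; `env_defined_variables` is a hypothetical helper, and it would need to run inside the Airflow container to see the values set in the yaml file.

```python
import os

ENV_PREFIX = "AIRFLOW_VAR_"


def env_defined_variables(environ=None) -> dict:
    """Return {name without prefix: value} for Variables set via environment."""
    environ = os.environ if environ is None else environ
    return {
        name[len(ENV_PREFIX):]: value
        for name, value in environ.items()
        if name.startswith(ENV_PREFIX)
    }


if __name__ == "__main__":
    for key, value in sorted(env_defined_variables().items()):
        print(key, "=", value)
```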
